One-Dimensional Characters

As an author, as you continue to write and gain experience (attempting, say, mastery of your storytelling craft), a typical goal is to create fewer and fewer one-dimensional characters in your stories. As you grow as a writer, you tend to attempt more and more deeply fleshed-out characters.

Which just makes it all the more jarring as you encounter one-dimensional characters IRL or online.

#Politics

#MediaLiteracy

#Life

[h/t Trav]

Science Fantasy: A Genre out of Time

NOTE: cross-posted from markharbinger.substack.com

I.

Literary genres are used to categorize and condition both the works and the readers. In general, I think this is an unhealthy reflex. I mean, sure, it’s good short-term business for Amazon to carve up audiences into predictable markets, each of them looking for the same, equally predictable, tropes within their stated genre preferences. But if that’s as far as it goes I worry about the long-term effects on the art itself.

Art is always a dance between artist and audience—shared meaning, negotiated and mutually reinforced. And at that level, there isn’t anything wrong with expectations/tropes being in play. Conventions are necessary. After all, if I’m reading a romance and suddenly there’s a vampire, well, that can’t work, can it? (Just kidding Twilight fans. Seriously, more on monsters and where they fit into this in a minute.)

But you don’t have to look very far to see genres being used to balkanize. In genre discussions on writers’ fora all over the internet, the battle lines are drawn with gusto. Star Trek, Star Wars, and/or Dune are ‘pure fantasy’ for indulging in contrivances like faster-than-light travel (FTL), or psychic abilities. Hell, even within Science Fiction, this instinct exists: I mean, show out, already: is your Sci-Fi “Soft” or “Hard”?

Full disclosure: I’ve never understood that one. Not only is there a futuristic rebuttal (eg, Clarke’s Three Laws) to any ultra-dogmatic hard science fiction approach, but you don’t need to go very far back into actual history to see examples where our hard science of the time was completely (and sometimes, hilariously) wrong. No doubt some of it is now, too.

Nonetheless, a lot of Sci-Fi fans seem to think the discovery elevator has stopped at their floor. I think this is in contrast to the work of most actual scientists. The entire ‘scientific method’ is based on humility about how little we actually know, as it sets out to prove only what we don’t. Plus, bleeding edge scientific theories (that’s right, I’m looking at, er, not quite able to look directly at you, Quantum Physics) are pretty fantastic.

Amazon lists about fifteen different sub-genres each for Sci-Fi and for Fantasy. But, nowhere to be seen is Slipstream (remember that one, Gen-X SF/F fans?) or, my personal favorite, the genre out of time: Science Fantasy.

II.

If genres really are just checklists of tropes writers need to check off in order to match reader expectations, then Science Fantasy (SCIFAN) is pretty easily defined: a story with a mix of both Sci-Fi and Fantasy tropes.

So, if it’s that simple, and if Sci-Fi and Fantasy are just a Venn diagram of trope clusters, why hasn’t SCIFAN caught on? Why did it disappear as a genre?

Or did it?

The history of how the underlying genres have competed for our attention is telling. If you are comparing lineage, the winner is clear: Per Stephen R. Donaldson: “all the oldest and most enduring forms of literature in all languages on this planet are fantasy.”

But by the 20th century, with our newfound mass displacement resulting from machines (not to mention our wacky new ability to end the human race with nukes), Science Fiction rose to answer the call. After all, if science got us into this mess…

In the 1930s, under John W. Campbell’s leadership, a magazine called Astounding Stories of Super-Science (what we now know as Analog Science Fiction and Fact) ushered in the era of pulp science fiction. For example, Asimov’s classic Foundation Series began as a serial in that mag. But this was only an interesting ancillary to the already established field of pulp fantasy (think: The Shadow, or Conan the Barbarian, or the works of H.P. Lovecraft).

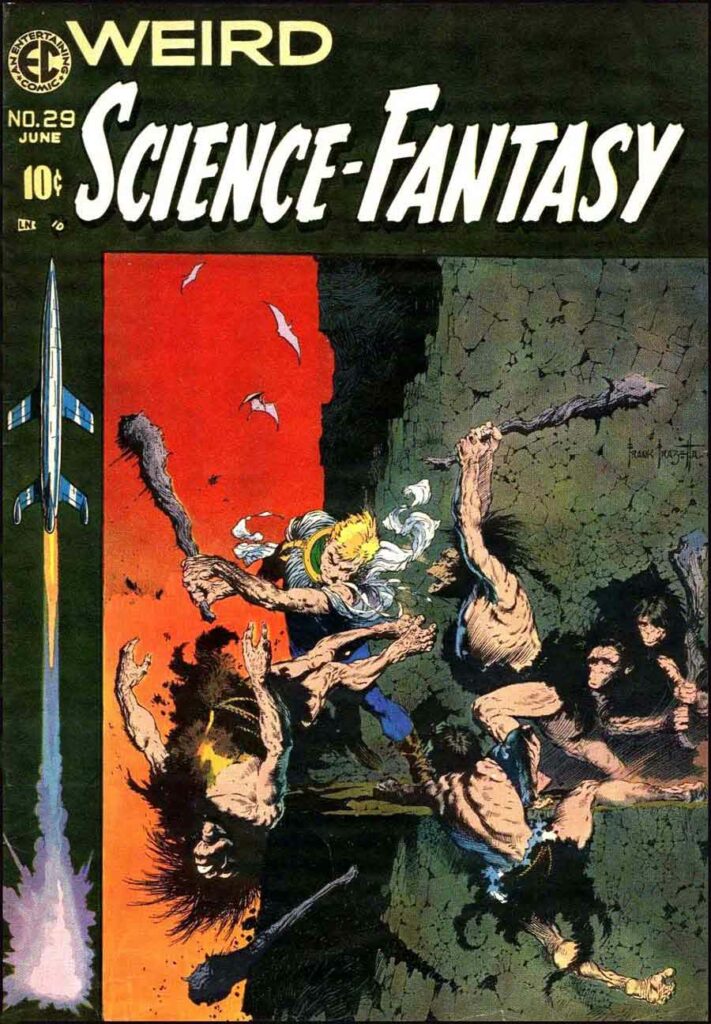

By the 1950s, Sci-Fi reached its peak. One cultural touchstone: EC Comics branched out from its better-known and more profitable horror titles, and started two new comics: Weird Science and Weird Fantasy. It’s worth noting that the lines were very much blurred between the two regarding what sort of stories you could expect to find in each. The first issue of Weird Fantasy featured stories about: a cyborg, time-travel, telepathy, and finally a story about astronauts landing on a strange world (Spoilers: that place turns out to be…future Earth! A reveal that Rod Serling reportedly lifted, pretty wholesale, 16 years later in his screenplay for what became Planet of the Apes).

By the 1960s, Fantasy reasserted its dominance in book marketing with The Lord of the Rings (50 MM copies sold as of ’03); while Dune was probably the standout science fiction story (but had only sold about 20MM as of 2015). Overall, science fiction still sells only a fraction as well as Fantasy. And, throughout, the genres seem to be mostly defined by their outstanding tropes, more than any tangible plot lines—but with plenty of overlap. For every hard and fast rule, there seems to be a weighty counter-example.

When I was growing up in the 1970s, science fiction was shorthand for ‘stories about the future.’ This was part of why the opening crawl of Star Wars in 1977 was so subversive: “A long time ago in a galaxy far, far away.”

In his foreword to Weird Science: The EC Archives — Volume 1, George Lucas says: “I often wonder if kids today can experience life with such a sense of discovery and excitement…Weird Science, Weird Fantasy, and Tales from the Crypt grabbed you from the start and absolutely refused to let go…EC Comics had it all: rocket ships, robots, monsters, time travel, and laser beams…It’s no coincidence that all of those are also in the Star Wars movies.”

III.

But, clearly, there need to be limits. Guide rails. Boundaries. As freeing as all of this cross-genre business can be, we can’t be deliberately confusing readers. How can this freedom be contained and channeled into better storytelling?

Well, what if, instead of analyzing these genres by their cosmetic trappings, we look a bit deeper. How do Sci-Fi and Fantasy differ in what they say about us and our relationship with the universe?

Fulbright Scholar and Professor Emeritus Carl Malmgren, in his 1988 paper “Towards a Definition of Science Fantasy,” defined SCIFAN as stories with worlds (or characters!) with “at least one deliberate and obvious contravention of natural law or empirical fact, but which provides a scientific rationale for the contravention and explicitly grounds its discourse in a scientific method and scientific necessity.” This means the ‘science’ in SCIFAN refers to the “attempt to legitimize situations that depend on fantastic assertions.”

What, then, of magic? Ted Chiang said this about the apparent overlap of the underlying genres: “I think magic is an indication that the universe recognizes certain people as individuals, as having special properties as an individual, whereas a story in which turning lead into gold is an industrial process is describing a completely impersonal universe. That type of impersonal universe is how science views the universe; it’s how we currently understand our universe to work. The difference between magic and science is at some level a difference between the universe responding to you in a personal way, and the universe being entirely impersonal.”

So, with these formulations in mind, a magic system that is completely explained for all the characters but one (say, a chosen one who is able to do things no other wizard can) is fantasy. But, if midi-chlorians, or some other fully fleshed-out system, even if it is still magic, is the explanation for everyone across the board, that’s SCIFAN. Likewise, if everyone can use FTL, psychic powers, or fairy dust for that matter, that’s also SCIFAN.

For this reason, time-travel stories are almost always going to feel more comfortable as SCIFAN stories. In most every time-travel story, there’s a specific way outlined to do time-travel. But, what about precognition or time-travel via magic? Now, we’re back to Chiang’s formula: Stories where folks can learn (that’s key) to magically do such things (e.g., Star Wars, Doctor Strange in the comics) are SCIFAN.

The exception that proves the point is Audrey Niffenegger’s The Time Traveler’s Wife. Only one character time travels. And no explanation is ever given, other than its analogy to a disability. That classic really is pure Fantasy.

And to back all the way up—if no natural laws (as we understand them now) or empirical facts are being violated in the story at all in the first place, we default back to Sci-Fi.
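For the checklist-minded, here is one way the sorting rule above could be written down. This is only a sketch of my reading of the Malmgren and Chiang formulations; the function name and its three yes/no questions are made up for illustration, and real stories will resist being boiled down to three booleans.

```python
def classify_story(breaks_natural_law: bool,
                   has_consistent_rationale: bool,
                   available_to_anyone: bool) -> str:
    """Sort a story into one of three buckets (hypothetical helper, not a standard)."""
    # Nothing we currently know about the universe is violated: default to Sci-Fi.
    if not breaks_natural_law:
        return "Sci-Fi"
    # The impossible thing has a rationale AND anyone could, in principle,
    # learn or use it: the universe stays impersonal, so it is Science Fantasy.
    if has_consistent_rationale and available_to_anyone:
        return "Science Fantasy"
    # Otherwise the universe is singling someone out: Fantasy.
    return "Fantasy"


# The essay's own examples, as I would answer the three questions:
print(classify_story(True, True, True))    # Star Wars
print(classify_story(True, False, False))  # The Time Traveler's Wife
print(classify_story(False, False, False)) # no laws broken at all
```

The order of the checks matters: the "no laws broken" question comes first, because the other two only make sense once something impossible is on the page.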

So how does that help us as writers?

Sci-Fi deals with the unknown. Fantasy deals with the unreal.

At its best, SCIFAN should then help us to both i) question what we think we “know” (transcending dogmatic certitude with all its pitfalls); and ii) bolster our courage against (and somehow achieve peace with) the unknowable.

In other words, to write Science Fantasy it might help to think of which characters are in conflict with the one (on the right track, but not knowing enough) and which are in conflict with the other (no idea what track they should be on).

IV.

/// Okay. But what about monsters? You promised me monsters. ///

You bet! Look, any monsters in your story are the dependent variable. So, take, for example: zombies. The zombies created by science (as in a Sci-Fi story) are ultimately just an obstacle—even if an existential one—for the protagonists to overcome (think: The Walking Dead); whereas the zombies in Fantasy stories whose source is beyond explanation (think: Night of the Living Dead) aren’t just existential, they’re a spiritual question. Those zombies beg the question: What did we do to deserve this? That’s a very different type of story.

That’s also where fantasy and horror could be seen as opposite sides of the same coin. If Fantasy is the yin, a story of how a human can rise up to meet an unknowable, unfriendly (or at least, impersonal) universe—then Horror is the yang, a tale of how hopeless that is. It’s no use. Even if you kill the big, bad shark, you’ll just never know why or how it was set upon you. Deal with it.

Another fun thought experiment is to look at religion through this lens. Sure, the religious trappings (avatars of good and evil, prophets, etc.) took Stephen King’s The Stand from a tale of a Sci-Fi pandemic all the way into dark Fantasy. But what about a story of a religion based entirely on a practice of learning that anyone could do, like Buddhism? For storytelling purposes, would I then work through that as SCIFAN?

V.

At its zenith in the sixties and seventies, SCIFAN’s greatest examples (the aforementioned Dune or Star Trek) were mislabeled (in our Cold War hangover) as science fiction.

But, even so, at least SCIFAN was already an accepted genre.

Now, it’s largely gone.

My theory is, as our current multi-pocalypse (global warming, pandemics, algorithms/AI, and, hey, nuclear is still there, finger zaps) plays out, the old formulation about how science will need to get us out of this mess will wear thin. We’re entering another era like the Sixties or the Industrial Revolution. Social strife. Upheaval. Spiritual isolation.

I’m looking for the pendulum to shift. If our solutions must come from someplace deeper within ourselves, so, too, must our storytelling.

If my scenario comes true and Science Fantasy does jump forward through time to reemerge in its rightful place, it’ll be interesting to see what sort of explanations we come up with for how it happened. And why.

When it does, you can be sure I’m going to point back to this prophecy essay.

There are Different Kinds of DystopAIs

The instinct with educators is to use any new tech/media tool right there, in the education setting. This is to introduce it to students and assess its usefulness. For #MediaLiteracy educators, this would seem to be even more important.

This is certainly true with AI. A popular motif that seems to be emerging is to treat AI bots as both reference source and as prototype student. First you ask AI for an answer (AI as reference), and then you cleverly ask AI to fact-check its own work (treating AI the way you would a clever student, for demonstration purposes).

Since AI routinely invents facts (what is commonly known as the “hallucination problem”), it isn’t valid as a reference tool. Scientist Gary Marcus (an AI expert who testified before Congress) recently shared a funny example of this: a friend of his, Tim Spalding (founder of LibraryThing), asked ChatGPT just last week for a bio of Gary Marcus. The bot inserted right into the middle of the answer: “Notably, some of Marcus’s more piquant observations about the nature of intelligence were inspired by his pet chicken, Henrietta.” Gary, of course, confirms this is all random nonsense. There is no chicken, no one named Henrietta (at all), etc.

Also, because it isn’t a true algorithm (where the same input will always result in the same output), but rather a ‘black-box’ machine-learning system whose output is somewhat randomized, it is also unreliable. So generative AI is neither valid nor reliable. In other words, it is a perfect tool for disruption but wholly inadequate as either a source of information or a quality-control mechanism for that same work.
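To make the reliability point concrete, here is a toy sketch in Python. It is not how any real chatbot is built; the word list and probabilities are invented for illustration, and the two function names are my own. It only contrasts a procedure that always gives the same answer for the same input with one that draws its answer at random, weighted by probability.

```python
import random

# Toy next-word probabilities (made-up numbers, purely illustrative).
NEXT_WORD_PROBS = {"chicken": 0.5, "dog": 0.3, "cat": 0.2}


def deterministic_answer(probs):
    """Same input, same output: always return the single most likely word."""
    return max(probs, key=probs.get)


def sampled_answer(probs, rng):
    """Somewhat randomized output: draw one word, weighted by its probability."""
    words = list(probs)
    weights = [probs[word] for word in words]
    return rng.choices(words, weights=weights, k=1)[0]


rng = random.Random()  # unseeded, so repeated runs may differ
print([deterministic_answer(NEXT_WORD_PROBS) for _ in range(5)])
print([sampled_answer(NEXT_WORD_PROBS, rng) for _ in range(5)])
```

Ask the first function the same question five times and you get the same word five times. Ask the second and the answers can change from one call to the next, which is the sense of "unreliable" I mean here.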

But, it gets even weirder when educators and others ask AI for its opinion about something.

At first blush, this seems safer ground. The Oxford definition of “opinion” is “a view or judgment formed about something, not necessarily based on fact or knowledge.” Okay, so AI is like an idiot savant (able to process and analyze staggering amounts of information, yet still often getting basic things factually wrong), so maybe this is more appropriate framing? Why not give this a go? After all, with humans, we often ask for opinions when the facts are somewhat hazy. We trust certain people with a sort of instinctual ability to zero in on the key issue…to jump straight to the punch-line of a complex situation. That seems to be a reasonable approach.

But, if opinions aren’t one-hundred percent based on empirical knowledge, what are they based upon?

Human opinions are based on feelings, attitudes, value judgments and/or beliefs. And these are all things that we develop through a myriad of interactions with the real world. But that interaction with, and learning from, the real world is precisely the weakest point of the AI ‘learning’ model. In point of fact, AI doesn’t learn at all from interacting with the real world. It’s just been built with a complex set of relationships between words and concepts, overlaid onto a massive amount of language data from the internet. And this is all so that it can predict what the proper next word in its response should be: what has become known as a “stochastic parrot”.

In another of his Substack newsletters, Marcus makes this point again by citing a recent paper called “The Reversal Curse: LLMs trained on ‘A is B’ fail to learn ‘B is A’.” And the results are just what it says. For example, if the LLM was trained on the fact that Tom Cruise’s parent is Mary Lee Pfeiffer, it cannot answer who Mary Lee Pfeiffer’s son is. In human parlance, what we would say about a child who couldn’t answer the latter question is: it isn’t really learning.

To ask AI for an opinion is to just anthropomorphize the AI, no more, no less. You’re not tapping into a new source of insight. It only works to cover up that same lack of validity and reliability by falsely imbuing it with human characteristics.

My opinion is: Opinions—and really mission-critical answers for any question—would still seem to be best served by humans.

While I do agree that, mid- and long-term, there are real dangers from underestimating AI (please do google “The AI Dilemma” from the Center for Humane Technology), short-term, special care should be taken to not over-estimate AI.

People like to say, “it’s just a tool.” But, it may not even be that. Rather, it could be the means by which each of us is made into a ‘tool’, if we’re not careful. Media Literacy educators, especially, could be helpful in mitigating that.

I’m Getting Verklempt…

…Go on, talk amongst yourselves.

I’ll give you a topic: Open source #AI algorithms are neither “open source,” “AI,” nor “algorithms”. Discuss.

Jesus (progressive liberal)

“The King will reply, ‘Truly I tell you, whatever you did for one of the least of these brothers and sisters of mine, you did for me.’”

—Matthew 25:40 NIV

Hidden Gems

“Rain and Tears” from Aphrodite’s Child (1968), featuring a young Vangelis.

Actually, it’s about 15 years before they knew how to make music videos…

“In the Year 2525” from Zager and Evans (1968). This topped the charts during both the Moon Landing and Woodstock.

DystopAI

As a director for a media literacy non-profit who is in fairly regular contact with a couple big-tech/media leaders on the front lines in the AI field, I’ve been deep-diving into this for months, now, and here’s where I’m at:

I believe the proper analogy is: AI is crypto, social media algorithms, and nuclear tech all rolled into one.

~~~

Like crypto, the positives are over-hyped (in the case of AI, that’s due to the hallucination problem, which will require human oversight for anything important). But, the similarity is striking: in its current iteration, there turn out to be very few good use-cases, really…except to facilitate crimes. I’ve been told the answer to this (y’know, for self-driving cars or medical diagnoses) is to have non-AI algorithms supervise and quality-control all AI decisions.

Uh, okay. But where do humans fit into all of this again? Tell me again how much of a hit in the job market this will cause and why it’s worth it?

Like Social Media algorithms, a really robust regulation regime might possibly leverage it into some real positives; but, instead, untested and unregulated prior to rollout, it will accelerate the deterioration of society (political lurching toward authoritarianism, genocides in places where institutions are already weakened, all Art will continue to regress toward the mean, and negative mental health outcomes will continue to spike…all while we continue to largely ignore other existential problems like Global Warming and Pandemic readiness).

And like Nuclear Tech—due to its sheer power, emergent ‘theory of mind’ properties and exponential growth—the downside is existential and probably no upside would really be worth it, anyway.

But, the genie is out of the bottle, so that’s where we’re at.

The (I think, obvious, and only truly ethical) answer is to use the “Precautionary Principle” and stop it as much as possible until it’s tested and a robust international regulatory regime is in place.

Meanwhile, AI tech leaders are asking for regulation even while they race to roll it out: Fingers Crossed, indeed.

No, no, no, no…

I was just recently introduced to the word “theytriarchy”.

And I must thank whoever showed it to me; because, I feel I have, now, been exposed to, in fact, the dumbest ****ing word ever created.

BOUNCING BACK

In a pre-Substack blog post last year, I set out the two big projects that The Fell Beast (the working moniker for my writing practice) would encapsulate: a suite of short stories and a sequel to my novel.

Then, over the holidays, my mom died—and my mind set about reshuffling the deck, until I was (once again) ready and able to deal the cards.

Suddenly, my writing brain was broken, except for poetry. Short stories sputtered and faded from consciousness. And the sequel was stalled, altogether. My writing practice became semi-random ministrations. In fact, as I look back, those efforts were more akin to menstruations—failing to give birth to something, I was left to periodically expel what I could.

But, times change. Progress pretends to happen. Or we happen upon some. Either way, recently, I’ve come to realize that the two projects I had planned were really just one project, a project that I was over-complicating. And so the sequel will be a mosaic novel.

Meanwhile, my poetry is still a thing (notice the elegant word choice; yeah, that’s right, I got game). As I mentioned in my Substack, a couple pieces have already been accepted by a journal for 2024.

And I’ve even been able to generate a couple of nifty, new short story ideas that are tentatively standing on their own like newborn foals.

The Fell Beast has awakened.